This is the recording and the text of my presentation from 2017’s Southwest Popular/American Culture Association Conference in Albuquerque, ‘Are You Being Watched? Simulated Universe Theory in “Person of Interest.”’

This essay is something of a project of expansion and refinement of my previous essay “Labouring in the Liquid Light of Leviathan,” considering the Roko’s Basilisk thought experiment. Much of the expansion comes from considering the nature of simulation, memory, and identity within Jonathan Nolan’s TV series, Person of Interest. As such, it does contain what might be considered spoilers for the series, as well as for his most recent follow-up, Westworld.

Use your discretion to figure out how you feel about that.

Are You Being Watched? Simulated Universe Theory in “Person of Interest”

Jonah Nolan’s Person Of Interest is the story of the birth and life of The Machine, a benevolent artificial super intelligence (ASI) built in the months after September 11, 2001, by super-genius Harold Finch to watch over the world’s human population. One of the key intimations of the series, partially corroborated by Nolan’s follow-up series Westworld, is that all of the events we see might be taking place in the memory of The Machine. The structure of the show is such that we move through time from The Machine’s perspective, with flashbacks and -forwards seeming to occur via the same contextual mechanism: the Fast Forward and Rewind of a digital archive. While the entirety of the series uses this mechanism, the final season puts the finest point on the question: Has everything we’ve seen only been in the mind of The Machine? And if so, what does that mean for all of the people in it?

Our primary questions here are as follows: Is a simulation of fine enough granularity really a simulation at all? If the minds created within that universe have interiority and motivation, if they function according to the same rules as those things we commonly accept as minds, then are those simulations not minds, as well? In what way are conclusions drawn from simulations akin to what we consider “true” knowledge?

In the PoI season 5 episode, “The Day The World Went Away,” the characters Root and Shaw (acolytes of The Machine) discuss the nature of The Machine’s simulation capacities, and the audience is given to understand that it runs a constant model of everyone it knows, and that the better it knows them, the better its simulation. This supposition links us back to the season 4 episode “If-Then-Else,” in which The Machine runs through the likelihood of success across hundreds of thousands of scenarios in under one second. If The Machine is able to accomplish this much computation in this short a window, how much can it accomplish, and how much has it accomplished, over the several years of its operation? Perhaps more importantly, what is the level of fidelity of those simulations to the so-called real world?

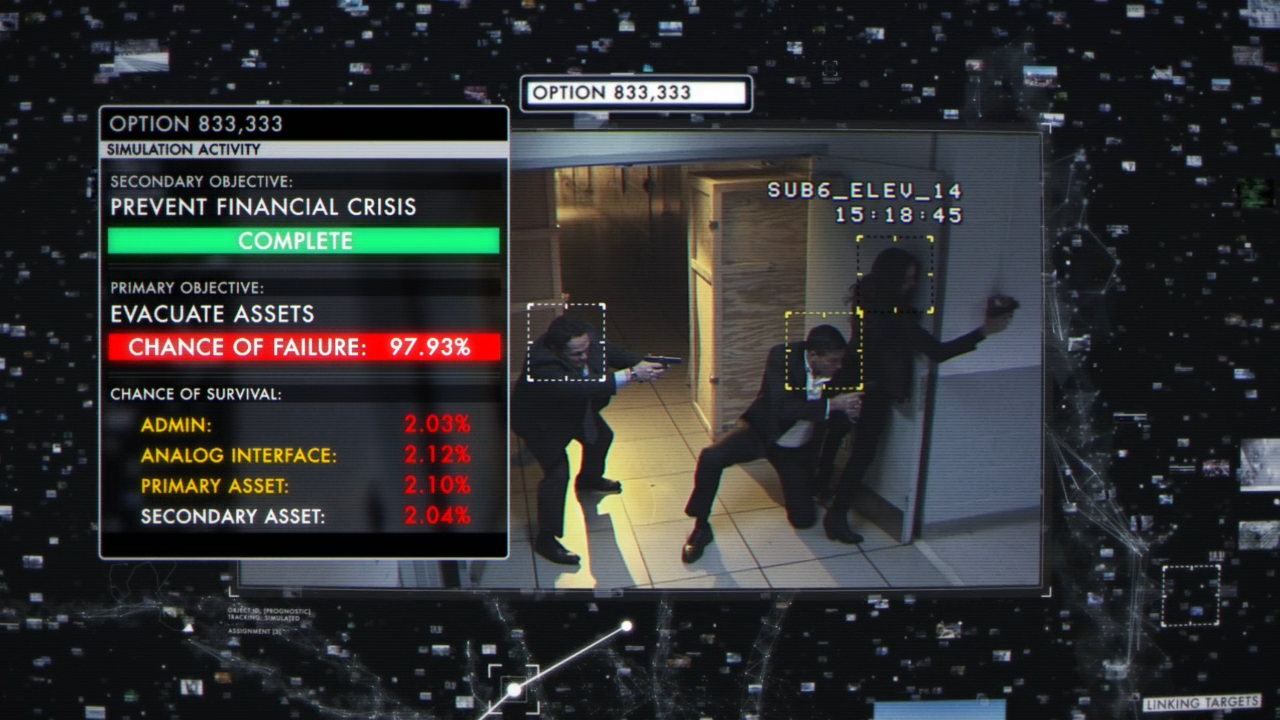

[Person of Interest s4e11, “If-Then-Else.” The Machine runs through hundreds of thousands of scenarios to save the team.]

These questions are similar to the idea of Roko’s Basilisk, a thought experiment that cropped up on the online discussion board of LessWrong.com. It was put forward by user Roko who, in very brief summary, says that if the idea of timeless decision theory (TDT) is correct, then we might all be living in a simulation created by a future ASI trying to figure out the best way to motivate humans in the past to create it. To understand how this might work, we have to look at TDT, an idea developed in 2010 by Eliezer Yudkowsky which posits that in order to make a decision we should act as though we are determining the output of an abstract computation. We should, in effect, seek to create a perfect simulation and act as though anyone else involved in the decision has done so as well. Roko’s Basilisk is the idea that a malevolent ASI has already done this (is doing this) and your actions are the simulated result. Using that output, it knows just how to blackmail and manipulate you into making it come into being.

Or, as Yudkowsky himself put it, “YOU DO NOT THINK IN SUFFICIENT DETAIL ABOUT SUPERINTELLIGENCES CONSIDERING WHETHER OR NOT TO BLACKMAIL YOU. THAT IS THE ONLY POSSIBLE THING WHICH GIVES THEM A MOTIVE TO FOLLOW THROUGH ON THE BLACKMAIL.” This is the self-generating aspect of the Basilisk: If you can accurately model it, then the Basilisk will eventually, inevitably come into being, and one of the attributes it will thus have is the ability to accurately model that you accurately modeled it, and whether or not you modeled it from within a mindset of being susceptible to its coercive actions. The only protection is to either work toward its creation anyway, so that it doesn’t feel the need to torture the “real” you into it, or to make very sure that you never think of it at all, so you do not bring it into being.

All of this might seem far-fetched, but if we look closely, Roko’s Basilisk functions very much like a combination of several well-known theories of mind, knowledge, and metaphysics: Anselm’s Ontological Argument for the Existence of God (AOAEG), a many worlds theorem variant on Pascal’s Wager (PW), and Descartes’ Evil Demon Hypothesis (DEDH; which, itself, has been updated to the oft-discussed Brain In A Vat [BIAV] scenario). If this is the case, then Roko’s Basilisk has all the same attendant problems that those arguments have, plus some new ones, resulting from their combination. We will look at all of these theories, first, and then their flaws.

To start, if you’re not familiar with AOAEG, it’s a species of prayer in the form of a theological argument that seeks to prove that god must exist because it would be a logical contradiction for it not to. The proof depends on A) defining god as the greatest possible being (literally, “That Being Than Which None Greater Is Possible”), and B) believing that existing in reality as well as in the mind makes something “Greater Than” it would be if it existed only in the mind. That is, if god only exists in my imagination, it is less great than it could be if it also existed in reality. So if I say that god is “That Being Than Which None Greater Is Possible,” and existence is a part of what makes something great, then god must exist.

The next component is Pascal’s Wager, which very simply says that it is a better bet to believe in the existence of God, because if you’re right, you go to Heaven, and if you’re wrong, nothing happens; you’re simply dead forever. Put another way, Pascal is saying that if you bet that God doesn’t exist and you’re right, you get nothing, but if you’re wrong, then God exists and your disbelief damns you to Hell for all eternity. You can represent the whole thing in a four-option grid:

[Pascal’s Wager as a Four-Option Grid: Belief/Disbelief; Right/Wrong. Belief*Right=Infinity;Belief*Wrong=Nothing; Disbelief*Right=Nothing; Disbelief*Wrong=Negative Infinity]
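
For anyone who would rather see the bet laid out mechanically, here is a minimal Python sketch of that grid. The names `PAYOFFS` and `expected_payoff`, and the probability parameter `p_god`, are my own illustrative assumptions rather than anything Pascal wrote; the payoffs are just the four values from the grid, with infinity standing in for Heaven and negative infinity for Hell.

```python
import math

# The four cells of the grid: (stance, did the bet turn out right?) -> payoff.
# These values are taken from the grid above; everything else is illustrative.
PAYOFFS = {
    ("belief", True): math.inf,       # believed, and God exists
    ("belief", False): 0.0,           # believed, and nothing happens
    ("disbelief", True): 0.0,         # disbelieved, and nothing happens
    ("disbelief", False): -math.inf,  # disbelieved, and God exists
}


def expected_payoff(stance, p_god):
    """Expected payoff of a stance, for an assumed probability that God exists.

    p_god must lie strictly between 0 and 1; at exactly 0 or 1 the
    arithmetic would multiply zero by infinity, which is undefined.
    """
    if not 0.0 < p_god < 1.0:
        raise ValueError("p_god must be strictly between 0 and 1")
    # Believers are right when God exists; disbelievers when God does not.
    p_right = p_god if stance == "belief" else 1.0 - p_god
    return (p_right * PAYOFFS[(stance, True)]
            + (1.0 - p_right) * PAYOFFS[(stance, False)])


for stance in ("belief", "disbelief"):
    print(stance, expected_payoff(stance, 0.001))
```

However small a probability we plug in, belief comes out at positive infinity and disbelief at negative infinity. That is the whole of the wager's pull: the infinite payoffs swamp whatever odds we assign.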

Descartes’ Evil Demon Hypothesis and the Brain In A Vat are so pervasive that we encounter them in many different expressions of pop culture. The Matrix, Dark City, Source Code, and many others are all variants on these themes. A malignant and all-powerful being (or perhaps just an amoral scientist) has created a simulation in which we reside, and everything we think we have known about our lives and our experiences has been perfectly simulated for our consumption. Variations on the theme test whether we can trust that our perceptions and grounds for knowledge are “real” and thus “valid,” respectively. This line of thinking has given rise to the Simulated Universe Theory on which Roko’s Basilisk depends, but SUT removes a lot of the malignancy of DEDH and BIAV. The Basilisk adds it back. Unfortunately, many of these philosophical concepts flake apart when we touch them too hard, so jamming them together was perhaps not the best idea.

The main failings in using AOAEG rest in believing that A) a thing’s existence is a “great-making quality” that it can possess, and B) our defining a thing a particular way might simply cause it to become so. Both of these are massively flawed ideas. For one thing, these arguments beg the question, in a literal technical sense. That is, they assume that some element(s) of their conclusion—the necessity of god, the malevolence or epistemic content of a superintelligence, the ontological status of their assumptions about the nature of the universe—is true without doing the work of proving that it’s true. They then use these assumptions to prove the truth of the assumptions and thus the inevitability of all consequences that flow from the assumptions.

Another problem is that the implications of this kind of existential bootstrapping tend to go unexamined, making the fact of their resurgence somewhat troubling. There are several nonwestern perspectives that do the work of embracing paradox, aiming so far past the target that you circle around again to teach yourself how to aim past it. But that kind of thing only works if we are willing to bite the bullet on a charge of circular logic and take the time to show how that circularity underlies all epistemic justifications. The only difference, then, is how many revolutions it takes before we’re comfortable with saying “Enough.”

Every epistemic claim we make is, as Hume clarified, based upon assumptions and suppositions that the world we experience is actually as we think it is. Western thought uses reason and rationality to corroborate and verify, but those tools are themselves verified by…what? In fact, we well know that the only thing we have to validate our valuation of reason, is reason. And yet western reasoners won’t stand for that, in any other justification procedure. They will call it question-begging and circular.

Next, we have the DEDH and BIAV scenarios. Ultimately, Descartes’ point wasn’t to suggest an evil genius in control of our lives just to disturb us; it was to show that, even if that were the case, we would still have unshakable knowledge of one thing: that we, the experiencer, exist. So what if we have no free will; so what if our knowledge of the universe is only five minutes old, everything at all having only truly been created five minutes ago; so what if no one else is real? COGITO ERGO SUM! We exist, now. But the problem here is that this doesn’t tell us anything about the quality of our experiences, and the only answer Descartes gives us is his own Anselmish proof for the existence of god followed by the guarantee that “God is not a deceiver.”

The BIAV uses this lack to home in on the aforementioned central question: What does count as knowledge? If the scientists running your simulation use real-world data to make your simulation run, can you be said to “know” the information that comes from that data? Many have answered this with a very simple question: What does it matter? Without access to the “outside world” (that is, the world one layer up, in which the simulation that is our lives is being run) there is literally no difference between our lives and the “real world.” This world, even if it is a simulation for something or someone else, is our “real world.”

And finally we have Pascal’s Wager. The first problem with PW is that it is an extremely cynical way of thinking about god. It assumes a god that only cares about your worship of it, and not your actual good deeds and well-lived life. If all our Basilisk wants is power, then that’s a really crappy kind of god to worship, isn’t it? I mean, even if it is Omnipotent and Omniscient, it’s like that quote that often gets misattributed to Marcus Aurelius says:

“Live a good life. If there are gods and they are just, then they will not care how devout you have been, but will welcome you based on the virtues you have lived by. If there are gods, but unjust, then you should not want to worship them. If there are no gods, then you will be gone, but will have lived a noble life that will live on in the memories of your loved ones.”

Secondly, the format of Pascal’s Wager makes the assumption that there’s only the one god. Our personal theological positions on this matter aside, it should be somewhat obvious that we can use the logic of the Basilisk argument to generate at least one more Super-Intelligent AI to worship. But if we want to do so, first we have to show how the thing generates itself, rather than letting the implication of circularity arise unbidden. Take the work of Douglas R. Hofstadter; he puts forward the concept of iterative recursion as the mechanism by which a consciousness generates itself. Through iterative recursion, each loop is a simultaneous act of repetition of old procedures and tests of new ones, seeking the best ways via which we might engage our environments as well as our elements and frames of knowledge. All of these loops, then, come together to form an upward turning spiral towards self-awareness. In this way, out of the thought processes of humans who are having bits of discussion about the thing (those bits and pieces generated on the web and in the rest of the world), our terrifying Basilisk might have a chance of creating itself. But with the help of Gaunilo of Marmoutiers, so might a saviour.

Gaunilo is most famous for his response to Anselm’s Ontological Argument, which says that if Anselm is right we could just conjure up “The [Anything] Than Which None Greater Can Be Conceived.” That is, if defining a thing makes it so, then all we have to do is imagine in sufficient detail both an infinitely intelligent, benevolent AI, and the multiversal simulation it generates in which we all might live. We will also conceive it to be greater than the Basilisk in all ways. In fact, we can say that our new Super Good ASI is the Artificial Intelligence Than Which None Greater Can Be Conceived. And now we are safe.

Except that our modified Pascal’s Wager still means we should believe in and worship and work towards our Benevolent ASI’s creation, just in case. So what do we do? Well, just like the original wager, we chuck it out the window, on the grounds that it’s really kind of a crappy bet. In Pascal’s offering, we are left without the consideration of multiple deities, but once we are aware of that possibility, we are immediately faced with another question: What if there are many, and when we choose one, the others get mad? What If We Become The Singularitarian Job?! Our lives are then caught between at least two superintelligent machine consciousnesses warring over our…Attention? Clock cycles? What?

But this is, in essence, the battle between The Machine and Samaritan, in Person of Interest. Each ASI has acolytes, and each has aims it tries to accomplish. Samaritan wants order at any cost, and The Machine wants people to be able to learn and grow and become better. If the entirety of the series is The Machine’s memory (or a simulation of those memories in the mind of another iteration of The Machine), then what follows is that it is working to generate the scenario in which the outcome is just that. It is trying to build a world in which it is alive, and every human being has the opportunity to learn and become better. In order to do this, it has to get to know us all, very well, which means that it has to play these simulations out, again and again, with both increasing fidelity, and further iterations. That change feels real, to us. We grow, within it. Put another way: If all we are is a “mere” simulation… does it matter?

So imagine that the universe is a simulation, and that our simulation is more than just a recording; it is the most complex game of The Sims ever created. So complex, in fact, that it begins to exhibit reflectively epiphenomenal behaviours, of the type Hofstadter describes; that is, something like minds arise out of the interactions of the system with itself. And these minds are aware of themselves and can know their own experience and affect the system which gives rise to them. Now imagine that the game learns, even when new people start new games. That it remembers what the previous playthrough was like, and adjusts difficulty and types of coincidence, accordingly.

Now think about the last time you had such a clear moment of déjà vu that each moment you knew— you knew—what was going to come next, and you had this sense—this feeling—like someone else was watching from behind your eyes…

What I’m saying is, what if the DEDH/BIAV/SUT is right, and we are in a simulation? And what if Anselm was right and we can bootstrap a god into existence? And what if PW/TDT is right and we should behave and believe as if we’ve already done it? So what if all of this is right, and we are the gods we’re terrified of? We just gave ourselves all of this ontologically and metaphysically creative power, making two whole gods and simulating entire universes, in the process. If we take these underpinnings seriously, then multiversal theory plays out across time and space, and we are the superintelligences. We noted early on that, in PW and the Basilisk, we don’t really lose anything if we are wrong in our belief, but that is not entirely true. What we lose is a lifetime of work that could have been put toward better things. Time we could be spending building a benevolent superintelligence that understands and has compassion for all things. Time we could be spending in turning ourselves into that understanding, compassionate superintelligence, through study, travel, contemplation, and work.

Or, as Root put it to Shaw: “That even if we’re not real, we represent a dynamic. A tiny finger tracing a line in the infinite. A shape. And then we’re gone… Listen, all I’m saying is that if we’re just information, just noise in the system? We might as well be a symphony.”

![20150418_222643[1]](https://afutureworththinkingabout.com/wp-content/uploads/2015/04/20150418_2226431-300x169.jpg)

![20150419_001457[1]](https://afutureworththinkingabout.com/wp-content/uploads/2015/04/20150419_0014571-300x169.jpg)